Neon, an independent startup backed by Samsung, showed humanlike computer agents at CES 2020. These digital avatars follow commands, make facial expressions, and can converse with real humans in different languages. Neon calls them artificial humans, or NEONs. They are not humanlike physical robots, however; they are realistic, AI-powered 2D avatars displayed on monitors.

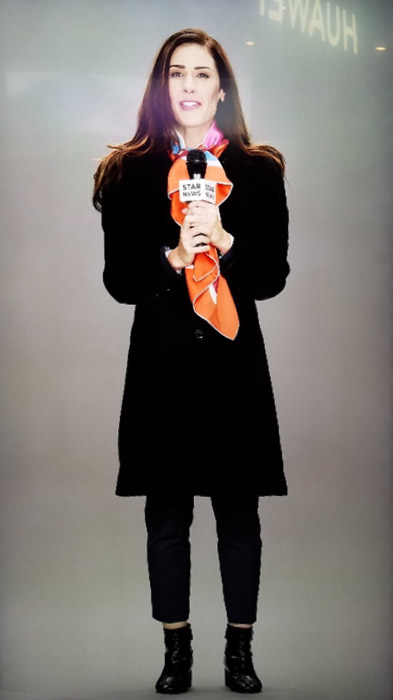

A NEON posing as a TV reporter

Neon CEO Pranav Mistry said in his CES keynote that his vision is to make interaction with machines more natural and humanlike. He described a future in which NEONs have emotions like a real human. According to Mistry, NEONs will differ from Siri and Alexa, which he characterized as robotic in nature.

Mistry cited "Core R3" as Neon's driving force but did not detail what it includes. From his descriptions, however, Core R3 appears to be a neural-network-based AI that can continue learning after instantiation. Although the NEONs looked realistic, their voices sounded less natural. This was possibly because of a poor internet connection and heavy ambient noise on the show floor. The multilingual speech in the demo relied on a cloud-based natural language processing (NLP) algorithm that Neon did not develop. Mistry expects the voice and language skills of NEONs to improve as NLP technologies on the market advance.

The NEONs displayed on the show floor were modeled on Neon employees and their friends; their appearance was not AI-generated. In one demo, another employee controlled the life-size digital avatar of a Neon employee while the real person stood in the audience. The audience seemed to enjoy this. Mistry said that generating fake avatars was not Neon's focus, although artificially generated faces could presumably be used in the future.

Mistry claimed that the entire demo and the NEONs shown were created in only four months.

Neon does not yet appear to have identified a killer application for NEONs. Throughout his presentation, Mistry cited a hotel concierge, a yoga instructor, a translator, and a personal assistant as example use cases. None of these applications requires the high emotional intelligence a NEON supposedly possesses. It is therefore unclear whether this differentiation is enough to displace the established chatbots on the market.

If Neon succeeds in delivering its vision, however, one important use case comes to mind: media content generation. If NEONs are fully controllable and can show a wide spectrum of emotions, they could be used in place of human actors. For example, a newscaster digitized into a NEON could deliver any piece of news 24/7, with familiar and appropriate facial expressions and emotions. It would also be possible to maintain several NEON versions of the same newscaster, each with subtle differences, to target different segments of news consumers. By the same argument, any media content that requires human actors could use these hyper-realistic NEONs.

Neon is still far from offering a product on the market. But if Neon ultimately delivers on its vision of emotionally aware, controllable, trainable, and realistic digital avatars, it could have a noticeable impact on the media industry.