Henry Cooke leads the Talking with Machines project at BBC R&D. Henry will join our next webcast “Broadcasting in the Age of Alexa” on November 1 at 2pm E.T. You can register for the webcast here.

This post was originally published on the BBC Research & Development blog.

By Henry Cooke, Senior Producer & Creative Technologist, BBC R&D

In BBC R&D, we’ve been running a project called Talking with Machines which aims to understand how to design and build software and experiences for voice-driven devices – things like Amazon Alexa, Google Home and so on. The project has two main strands: a practical strand, which builds working software in order to understand the platforms, and a design research strand, which aims to devise a user experience language, set of design patterns and general approach to creating voice user interfaces (VUI), independent of any particular platform or device.

This is the first of two posts about our design research work. In this post, we’ll talk about a prototyping methodology we’ve been developing for VUI. In the next, we’ll outline some of our key findings from doing the work.

Image above by facing-my-life on Flickr, cc licence.

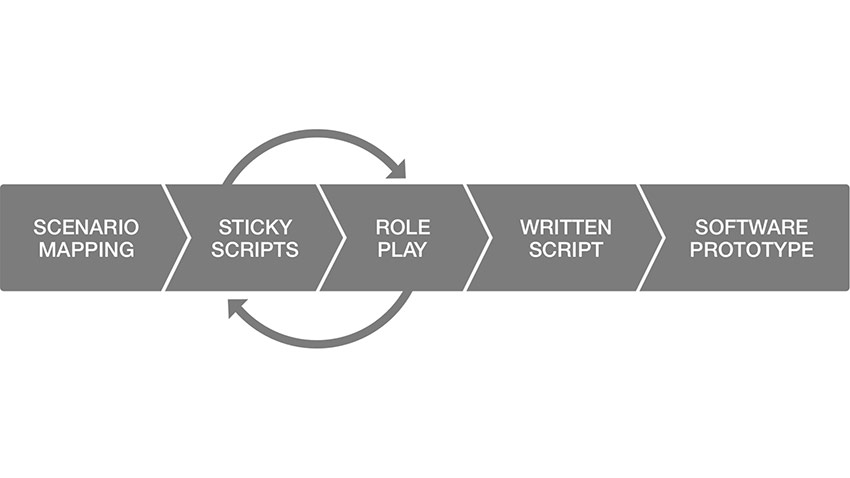

We devised a prototyping process in 2016, while starting our design research – we needed to imagine some possible use cases for voice interfaces which were grounded enough in reality to be useful. Earlier this year, we were ready to try this process out on some real use cases. At about the same time, our colleagues in BBC Children’s got in touch and asked if we could help them do some thinking about VUI. This seemed like a nice piece of serendipity, so we decided to collaborate on some VUI experience prototypes together using Children’s expertise, content and audience needs to drive another iteration of our process.

What makes a prototype?

For our purposes, a prototype can be anything from a script for humans playing the parts of a machine and a user through to a fully-realised piece of software running on an Alexa or a Home. The point of a prototype is to communicate how a particular idea for an experience might work, and to test that idea. It might be that we only want to test a small part of an idea, or quickly try out something we’re not sure about. It might be that, in developing an idea from sticky notes to script, we decide that it really doesn’t work – all of these outcomes are totally valid. We try only to develop ideas as far as we need to prove whether they work or not, and it might be that we can prove that with a fairly rough, undeveloped prototype. Not everything needs working up in software to test its validity.

Collaboration

For our prototyping work with Children’s, we were dead set that we should have a highly collaborative process. Our earlier work on VUI prototyping had taught us that you can’t design these kinds of experience in discipline-defined bubbles; you need to have technical, editorial and UX people working together from the beginning to understand the shape and flow of an experience. Our experience is that VUI design happens at the point where these three skillsets overlap.

Not only does this mean that the most effective VUI design happens in multi-disciplinary teams, but it also means that you should be collaborative by default. We make all our artefacts (storyboards, scripts etc) shared from the start – either through shared file services or shared document services. This way, all of the team can see how the thinking is shaping up and contribute ideas.

The Process

Scenario Mapping

We devised the scenario mapping technique as a way to think about the kinds of situations someone might be in when they use a voice-driven device. Scenarios come before specific ideas for applications – they’re a way to think about the reasons someone might have to use a VUI, and what kind of context they and the device will be in when they’re using an application. From one scenario, you might come up with a few different ideas for applications.

Thinking about scenario – the setting of an experience – is especially important for VUI, since voice-driven devices, even more so than mobile, are used in someone’s existing social situation and surroundings. You can’t assume you’ve got a little bubble of someone’s undivided attention.

Additionally, thinking in detail about the context of an application’s use before thinking about any specific application will enable you to write a script and design a flow which fit neatly into that context and so design a better VUI!

Scenario mapping is particularly useful in an R&D context where you might not have much data about existing use cases – you might be designing for a new or hypothetical technology – and you need to devise some plausible, useful scenarios.

Categories

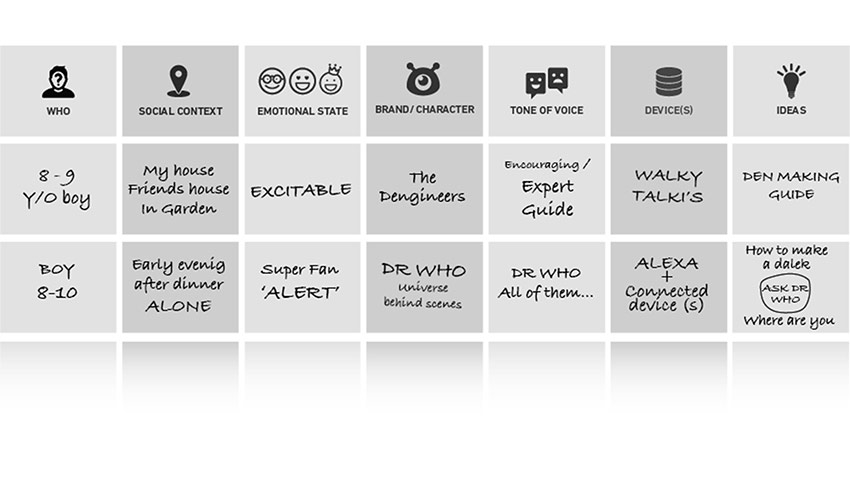

We build scenarios from ingredients in a number of categories. For our collaboration with Children’s, our categories were:

- Who: Someone (or a group of people) using a VUI.

- Social context: Are they at home? At school? In a car?

- Emotional state: Are they inquisitive? Tired? Task-focussed?

- Brand / Character: Who is the user talking to? What kind of content?

- Tone of voice: Peppy? Sensitive? Teacher-like?

- Device(s): Amazon Echo? An in-car system? A smart radio?

Categories are flexible though, and should be tweaked for the needs of any given project. We prepared these categories in advance of our first workshop.

Ingredients

Once we’d identified our categories, we needed to populate them with concrete examples of things – ingredients. These ingredients are then used to build scenarios. Working in pairs, we wrote as many ingredients down on sticky notes as we could – “4 year old boys” for who, for example, or “excitable” for tone of voice. At this point, you’re going for quantity rather than quality.

Scenario building

When you’ve got plenty of ingredients, you can then start combining them into scenarios.

Using the categories as column headings, stick one ingredient from each category in a row underneath them, so that each row reads as a plausible setting for a VUI application.
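As a rough sketch of the grid described above, the categories can be treated as keys and the sticky-note ingredients as lists, with one scenario being one ingredient per category. The category names and some ingredients come from this post; “siblings aged 6 and 9” and “a storyteller character” are made-up placeholders, and the function names are hypothetical. Random draws are only a prompt for discussion here: in the workshop, people choose the rows that sound plausible.

```python
import random

# Categories from the post; ingredients are illustrative placeholders,
# not the notes produced in the actual workshops.
INGREDIENTS = {
    "Who": ["4 year old boys", "siblings aged 6 and 9"],
    "Social context": ["at home", "in a car"],
    "Emotional state": ["inquisitive", "tired"],
    "Brand / Character": ["a storyteller character"],
    "Tone of voice": ["excitable", "teacher-like"],
    "Device(s)": ["Amazon Echo", "smart radio"],
}


def build_scenario(ingredients, rng):
    """Pick one ingredient per category, giving one row of the grid."""
    return {category: rng.choice(options) for category, options in ingredients.items()}


def describe(scenario):
    """Render a scenario row as a single line for discussion."""
    return " | ".join(f"{category}: {value}" for category, value in scenario.items())


if __name__ == "__main__":
    rng = random.Random(2016)  # seeded so the same draws can be repeated
    for _ in range(3):
        print(describe(build_scenario(INGREDIENTS, rng)))
```

Each printed line is one candidate row; the team would still decide which rows are worth keeping.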

Application ideas

Once you’ve built a few scenarios, pick a few that look promising and see if they prompt any ideas for specific applications. We often find that ideas come along while you’re building the scenarios. That’s fine too; just make sure to note them down as you go along.

By the end of the scenario building process, you should have some ideas for applications which you want to work up into prototypes.

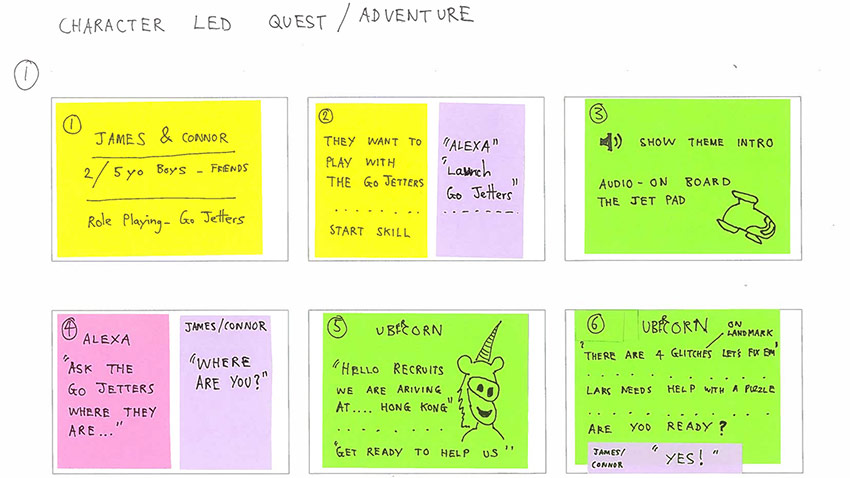

Sticky Scripts

The first step we take when sketching out a voice experience is to map out a script with sticky notes. We draw a speech bubble on a separate note for everything said by a person or machine, and colour-code the notes for each voice. We also add notes for actions into the flow – for instance, a machine looking up some data, or a person adding ingredients to a recipe. These actions are also colour-coded by actor. You could also add notes for multimodal events – things like information appearing on the screens of nearby devices, or media being played on a TV.

Sketching out a voice-led experience like this is a really quick, useful way to see how its flow and onboarding will work, and which bits seem uneven or need more unpicking. Using sticky notes means that it’s really easy to add and remove events or re-order sections where needed. Using colour-coded sticky notes means you can easily see if there are parts of a flow where the machine is saying too much, or asking too many questions.
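If a sticky script is later typed up, the same “is the machine saying too much?” check can be approximated in a few lines. This is a minimal sketch under our own assumptions: the `Note` fields stand in for note colour and type, the example flow is invented, and the two measures (longest unbroken run of machine speech, number of machine questions) are just one way to put numbers on what the colours show at a glance.

```python
from dataclasses import dataclass


@dataclass
class Note:
    actor: str  # e.g. "machine" or "user"; stands in for the note colour
    kind: str   # "speech" or "action"
    text: str


def longest_run(notes, actor):
    """Length of the longest unbroken run of speech notes by one actor."""
    best = current = 0
    for note in notes:
        if note.kind != "speech":
            continue  # actions are silent, so they do not break a run
        current = current + 1 if note.actor == actor else 0
        best = max(best, current)
    return best


def question_count(notes, actor):
    """Number of speech notes by this actor that end in a question mark."""
    return sum(
        1
        for n in notes
        if n.actor == actor and n.kind == "speech" and n.text.rstrip().endswith("?")
    )


# Invented example flow, not taken from a real prototype.
flow = [
    Note("machine", "speech", "Hello! Shall we make a song?"),
    Note("user", "speech", "Yes"),
    Note("machine", "action", "look up animal list"),
    Note("machine", "speech", "Great."),
    Note("machine", "speech", "Which animal do you like?"),
    Note("machine", "speech", "Can you make its noise?"),
    Note("user", "speech", "Moo!"),
]

print(longest_run(flow, "machine"))     # 3
print(question_count(flow, "machine"))  # 3
```

A run of three machine notes with two questions back to back is the kind of spot that might be worth revisiting on the wall.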

Role Playing

The single most useful thing we’ve done while prototyping VUI is to role-play out an idea for an experience. Like prototyping an idea for an app or website in code, having a ‘working’ version of your idea which you can test on someone immediately gives you a feel for what works and what doesn’t in a way that static words on a page just don’t convey. Your end experience will be voice-driven, so it makes sense to test in voice as early as possible!

We role-played VUI ideas in teams, with each person taking one of the following roles and everyone except the user reading from the sticky-note script:

- System voice

- Triggered sound effects

- Quick props (e.g. paper sketches of things happening on a tablet screen)

- Other machine roles, for example making queries against data sources or running scripts. Use your categories of sticky notes as a guide.

- User (who doesn’t get to see the script!)

If you can, try to recruit someone to act as the user who isn’t already familiar with the idea – they should be ‘using’ your prototype in as real a way as possible. This will enable you to get early feedback and observations on how the experience flows and any pressure points or parts where the ‘user’ feels lost or unclear about what’s happening.

It can also be very useful to recruit an observer – someone else who isn’t familiar with the idea, whose job is just to watch, take notes and observe pauses, hesitations or problems with the experience.

You can then use the results of the role-play to drive iterations of your sticky-script.

Blind role playing

For the best possible results, you can prevent the person playing the user role from seeing the other actors by putting them behind a partition, or on the other end of a phone line. This really forces you to check that the script is adequate to inform the user what is going on. The benefit of this for VUI is that neither the actors nor the user can be influenced by non-verbal cues.

Written Script

The next step in our process is a written script of an experience – this is probably more useful for narrative experiences than data-driven ones, although reading through a complete script with an unfamiliar ‘user’ is a useful prototyping and testing technique in itself.

The lo-fi, sticky script writing will help to map the shape and flow of your experience, but after a few iterations you’ll probably hit a point where you’ll need to flesh out the experience and figure out the detail. We do this by writing a script, similar to the script for a play or radio drama. Every word said by a machine, and approximations of what you think people might say during an experience should be part of this script – branches can be used from page to page, like an adventure gamebook, for more complex flows.
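The gamebook-style branching can be pictured as a small lookup table: each page holds what the machine says and which page each expected reply leads to. The sketch below is an illustration only; the page names, lines and replies are invented, and the two helper functions are hypothetical. It shows two checks that are tedious to do by eye: finding branches that point at a page nobody has written yet, and reading one path through the script.

```python
# Each page holds what the machine says and where each expected reply leads.
# Page names, lines and replies are invented for illustration.
SCRIPT = {
    "start": {
        "machine": "Do you want a story or a song?",
        "replies": {"story": "story", "song": "song"},
    },
    "story": {"machine": "Once upon a time...", "replies": {}},
    "song": {"machine": "Let's sing together!", "replies": {}},
}


def broken_links(script):
    """Return (page, reply, target) for every branch pointing at a missing page."""
    return [
        (page, reply, target)
        for page, body in script.items()
        for reply, target in body["replies"].items()
        if target not in script
    ]


def read_through(script, replies, start="start"):
    """Follow one path through the script, returning the machine's lines."""
    page = start
    spoken = [script[start]["machine"]]
    for reply in replies:
        target = script[page]["replies"].get(reply.lower())
        if target is None:
            spoken.append("(no branch for this reply; the script needs a fallback)")
            break
        page = target
        spoken.append(script[page]["machine"])
    return spoken


if __name__ == "__main__":
    print(broken_links(SCRIPT))
    for line in read_through(SCRIPT, ["Song"]):
        print(line)
```

Replies that have no branch are often the interesting ones: they mark places where the written script needs a fallback line.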

This written script is, in and of itself, a good prototype – it can be used by a facilitator in a user-testing situation to run a detailed experience prototype, with participants taking the role of user. Essentially, this is a slightly more formalised version of the role-playing step used internally by the team while developing the script.

It might be that some ideas don’t need to (or can’t) go any further than a script – maybe an idea is too complex or costly to build in software. This is fine – you can still test a script with users using the role-playing technique and gain valuable insight. Maybe some parts of a more complex idea could be spun off into a more plausible software prototype, or learnings could inform other projects.

Shared document

We have found it very useful to make our scripts shared documents from the start – this goes hand in hand with the importance of collaboration across disciplines from the start of a prototyping project. Having editorial, UX and development eyes on the script as it develops will help to iron out any problems as they arise, and given the observation that good VUI design happens in the intersection between those skillsets, it’s very helpful for authorship of a script to be shared between people who combine those skills.

Running the script through with humans and machines

This may sound obvious, but it’s important to keep saying the script out loud as it develops – some things which look fine on the page sound strange when said out loud, and hearing it back helps get a feel for the cadence of a piece and any segments which seem overly terse or verbose.

We found that using humans to do the reading through every time changes were made to a script was quite a time-consuming process, and so we created a tool using text-to-speech to generate computer-voiced readthroughs of scripts during development. This technique is nowhere near as good as using natural human speech, but it doesn’t have to be; the intention is to get a rough idea of how a script sounds before stepping up to ‘real’ speech. In this way, when you get to a readthrough, a lot of the small problems with a script will already be resolved and you can use people’s time to resolve larger or more subtle issues with a script that machine-reading can’t catch.
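The tool itself isn’t described in this post, so the following is only a guess at the general shape of such a readthrough helper, not the BBC’s implementation. It assumes a script typed as `SPEAKER: line`, splits it into cues, and hands each cue to a `speak` function with a different voice per speaker. The `speak` callback and the voice names are placeholders; printing stands in for whatever text-to-speech engine is available.

```python
def parse_script(text):
    """Split 'SPEAKER: line' text into (speaker, line) cues, skipping blanks."""
    cues = []
    for raw in text.splitlines():
        line = raw.strip()
        if not line:
            continue
        speaker, sep, spoken = line.partition(":")
        if not sep:
            # A line with no speaker label continues the previous cue.
            if cues:
                prev_speaker, prev_text = cues[-1]
                cues[-1] = (prev_speaker, prev_text + " " + line)
            continue
        cues.append((speaker.strip().upper(), spoken.strip()))
    return cues


def read_aloud(cues, speak, voices):
    """Send each cue to speak(voice, text), using one voice per speaker."""
    for speaker, text in cues:
        speak(voices.get(speaker, voices["default"]), text)


if __name__ == "__main__":
    demo = """
    MACHINE: Hello! Shall we make a song?
    USER: Yes please
    """
    # Printing stands in for a real text-to-speech call (an assumption).
    read_aloud(
        parse_script(demo),
        speak=lambda voice, text: print(f"[{voice}] {text}"),
        voices={"MACHINE": "voice-a", "USER": "voice-b", "default": "voice-a"},
    )
```

Note that this simple parser treats the first colon on a line as the speaker separator, so spoken lines that themselves contain a colon would need a stricter format.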

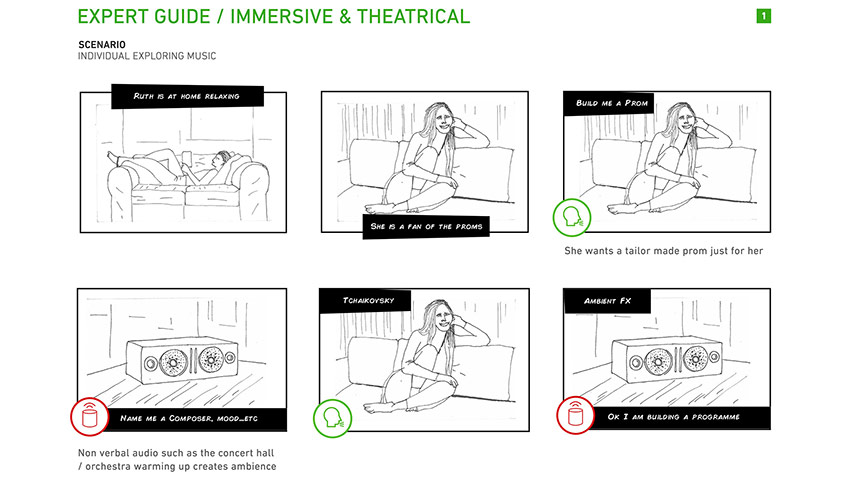

Animatics and Storyboards

Despite the focus given to language and scripting during our process, we have found that it can be useful to draw storyboards to illustrate the flow of an experience and the wider context someone is in while they use a VUI – at home, for example, or in a car.

Creating a storyboard also makes it possible to create an animatic – an animated storyboard. This is a useful artefact for illustrating how an experience plays out over time, and works well when communicating an idea outside of the design team.

Software Prototypes

The highest-resolution prototype you can make is in software – either for a VUI device itself, or a platform which allows you to try things not yet possible on VUI devices. For our collaboration with Children’s, we ended up creating two software prototypes – one which runs on Alexa and one for iOS.

The iOS prototype is of an experience in which children are invited to make animal noises during a musical story – their noises are then recorded and used in a song at the end of the experience. Since recording audio is not currently possible on Alexa or Google Home, we used iOS. However, the platform is irrelevant in this case – we’re testing the experience, not the platform – and in user testing sessions we hide the phone and use a handsfree speaker to focus attention on the sound.

The Alexa prototype is of an interactive audio episode of Go-Jetters, a geography adventure show. We originally intended to run this as a Wizard-Of-Oz prototype, with a human facilitator triggering pre-recorded snippets of audio during a user testing session. However, once we’d recorded the audio, we realised that with a little tweaking of the script, we could stitch together an Alexa skill from the pieces relatively easily. This allowed us to make a much more realistic prototype which we were able to test with a larger group of testers in their own homes by adding them to a testing group on Alexa.
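This post doesn’t go into how the skill was put together, so the following is a generic sketch rather than the Go-Jetters code. It assumes a custom Alexa skill whose handler returns the standard JSON response body, with the pre-recorded snippets played through SSML `<audio>` tags. The clip names, URLs and the `audio_response` helper are hypothetical, and Alexa places its own requirements on hosted audio that should be checked against the current documentation.

```python
import json
from xml.sax.saxutils import quoteattr

# Hypothetical clip locations; a real skill would point at its own HTTPS-hosted audio.
CLIPS = {
    "intro": "https://example.com/audio/intro.mp3",
    "question": "https://example.com/audio/question.mp3",
}


def audio_response(clip_names, end_session=False):
    """Build a response body that plays the named clips in order."""
    # quoteattr escapes the URL and wraps it in quotes for use as an attribute.
    tags = "".join(f"<audio src={quoteattr(CLIPS[name])}/>" for name in clip_names)
    return {
        "version": "1.0",
        "response": {
            "outputSpeech": {"type": "SSML", "ssml": f"<speak>{tags}</speak>"},
            "shouldEndSession": end_session,
        },
    }


if __name__ == "__main__":
    print(json.dumps(audio_response(["intro", "question"]), indent=2))
```

Keeping the session open (`shouldEndSession` set to false) is what lets the skill wait for the child’s reply before choosing the next clip.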

Another benefit of building a prototype on the target platform itself is that it’s possible to iterate from a prototype to a releasable product – as long as you’re careful to refactor and tidy up as you go. Prototype development is not the same as production development.

Thanks!

This prototyping method is itself a prototype – we’ll be refining it as we do other prototyping projects in the future. We’d love to hear if anyone is using it outside of our team – if the method as a whole, or bits of it, are useful to you, please let us know!

Thanks to the whole Talking with Machines project team for their work on the design research which led to these posts: Andrew Wood, Joanne Moore, Anthony Onumonu and Tom Howe. Thanks also to our colleagues in BBC Children’s who worked with us on a live prototyping project: Lisa Vigar, Liz Leakey, Suzanne Moore and Mark O’Hanlon.