Steve Johnson is the founder of SeeBoundless, a company that innovates news gathering practices, rewrites university courses to include immersive storytelling, and finds new ways for audiences to learn about the world around them. Steve wrote the following paper "Using Drone Photogrammetry for News" for his DFX Engage! session at NAB Show 2019. Register for the Show to see Steve's session on Monday, April 8 from 3:20pm to 4:40pm in N260 LVCC.

Abstract – The process of scanning large objects, locations and mapping using the photogrammetry technique was inhibited by high costs and computing power until recently. Now, with consumer electronics we can produce high quality 3D objects for use in augmented reality applications through analytical photogrammetry. This process can be done on budget and on deadline to provide a deeper level of interactivity and contextualization for consumers of news and information.

Introduction

News organizations have been pushing technological boundaries for more than a century to deliver content to audiences in new ways that increase understanding of editorial content and the value of the product.

Cost and computing power have historically been the two largest barriers to entry for photogrammetry in news production. However, news producers can now create accurate and detailed maps, objects and tools for audiences to interact with and consume, thanks to advances in consumer cameras, drones and efficient algorithmic photogrammetry software, both local and cloud-based.

Additionally, because of advancements in augmented reality (AR) platforms we can create a workflow to implement photorealistic models into news applications, websites and broadcasts.

Legally, the Federal Aviation Administration (FAA) Part 107 regulation [1], which was passed in 2016, gave news organizations and broadcasters a legal pathway to photograph and scan large objects and buildings. It also gave these same organizations a legal way to create tools to map locations.

These AR applications can be applied to a variety of news stories and platforms making it easier to integrate into existing production ecosystems with cross-compatible file formats.

In 2018, more than 1.8 billion mobile devices had operating systems that include AR capabilities, ARCore (Android) and ARKit (iOS), and this number is projected to be more than 4 billion by 2020 [2]. This means that building high-quality 3D scans with drone photography in news production naturally fits into publishing and broadcasting models without drastically changing processes.

Furthermore, the tools needed to build 3D models from photographs have become more accessible to producers, and the resulting models are now compatible with multiple use cases.

History

Photogrammetry is defined by the American Society for Photogrammetry and Remote Sensing (ASPRS) as “the art, science, and technology of obtaining reliable information about physical objects and the environment through processes of recording, measuring and interpreting photographic images and patterns of recorded radiant electromagnetic energy and other phenomena” [3].

The origin of photogrammetry goes back to 1494, when Fra Luca Bartolomeo de Pacioli, an Italian mathematician and collaborator of Leonardo da Vinci, published Summa de arithmetica, geometria, proportioni et proportionalita (Summary of arithmetic, geometry, proportions and proportionality) [4]. This book is one of the first known accounts of proportions in mathematics and art, forming the basis for the modern use of photogrammetry to measure distances in images using scale. The practice was perfected by French scientist Aimé Laussedat in 1849. He was the first person to use terrestrial photographs in map compilation. In 1858, Laussedat experimented with aerial photogrammetry using strings of kites, recognizing the value of low-altitude aerial photography as a tool for mapping [5]. Today, consumer tools, which utilize digital photographs with GPS metadata, have drastically changed the process but build on the same foundations used by Pacioli, da Vinci and Laussedat.

Current photogrammetry uses analytical photogrammetry programs that take digital photographs as input and render photorealistic 3D models. This analytical process is done through the mathematical calculation of coordinates of points in object space based upon camera parameters, measured photo coordinates, and ground control [6].

Although AR in news production is relatively new, it has been used in other professions since the 1990s. The New York Times on Feb. 1, 2018, debuted a standalone AR object of a newspaper honor box to introduce readers to the technology [7]. The Times then followed its AR debut with an interactive story on their iOS app for the 2018 Winter Olympics using photogrammetry to capture athletes in a competitive position [8].

In broadcast production, the use of volumetric video to present an AR-like image to viewers on 2D screens debuted in 2008 on CNN during its election night coverage. The network used 35 cameras to capture a moving volumetric image of correspondent Jessica Yellin, sent live from Chicago to its New York City studios. The volumetric video was then overlaid, similar to a graphic insert, so that viewers could see anchor Wolf Blitzer speaking to Yellin [9]. The technology was a first for live TV but was criticized by many for being labeled a “hologram,” because Blitzer wasn’t actually seeing Yellin in studio; the volumetric video of her was overlaid by producers [10].

Nonetheless, the technology was a first and showed the industry the possibility of using 3D imagery live in studio to help anchors better interact with correspondents.

Ten years later, The Weather Channel perfected the use of interactive AR elements in broadcast in their coverage of Hurricane Florence. Meteorologist Greg Postel demonstrated the danger of rising floods on air by placing himself on flooded streets to scale. The technology for this broadcast, Unreal Engine, is normally used for video game development, but in showing audiences the effects of storm surges proved to be “more effective than numbers, or even maps” [11].

Today, we continue to see examples of AR in news and broadcast production with photorealistic models created using algorithmic photogrammetry and an increased audience understanding of the technology.

Photogrammetry Process

Object Capture

Preparing a drone-scale photogrammetry capture requires significant planning of both the object itself and the regulations of commercial drone photography.

It is best if the object has limited reflective surfaces. For example, objects with framed windows and matte surfaces perform much better in the post-processing phase than floor-to-ceiling windows.

It is imperative that you have authorization to fly under current FAA Part 107 regulations [1] and an unobstructed view of the object from all sides, both on the ground and in the air.

Currently, there are two methods to capturing drone photogrammetry models: radial capture and grid capture.

Figure 1: An example of a radial capture process

Radial capture, as demonstrated in Figure 1, requires you to fly circular patterns facing the camera and drone inward at varying altitudes to create a virtual dome over the object combined with photographs around the object from the ground.

The radial capture method works best for objects with few obstructions around them that could block your view and prevent you from creating a complete dome.
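The radial pattern described above can be sketched as a set of circular passes that together trace a dome. This is a minimal illustration rather than a flight-planning tool: the function name, the local metre grid with the object at the origin, and the example dome radius and angles are all assumptions made for the sketch.

```python
import math

def dome_waypoints(dome_radius_m, elevation_angles_deg, shots_per_ring):
    """Return (x, y, altitude, heading_deg, gimbal_pitch_deg) tuples.

    The object sits at the origin of a local grid in metres (x east, y north).
    Each elevation angle produces one circular pass; higher passes have a
    smaller ring radius, so the passes together trace a dome.
    """
    waypoints = []
    for elevation in elevation_angles_deg:
        ring_radius = dome_radius_m * math.cos(math.radians(elevation))
        altitude = dome_radius_m * math.sin(math.radians(elevation))
        for i in range(shots_per_ring):
            angle = 2 * math.pi * i / shots_per_ring
            x = ring_radius * math.cos(angle)
            y = ring_radius * math.sin(angle)
            # Compass bearing (0 = north, 90 = east) from the drone to the object.
            heading = math.degrees(math.atan2(-x, -y)) % 360
            # Tilt the camera down by the elevation angle so it aims at the origin.
            waypoints.append((x, y, altitude, heading, -elevation))
    return waypoints

# Hypothetical plan: a 50 m dome flown at two elevation angles, 12 photos per pass.
plan = dome_waypoints(50, [30, 60], 12)
```

Every waypoint faces inward, which matches the requirement that the camera and drone point at the object throughout each circle.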

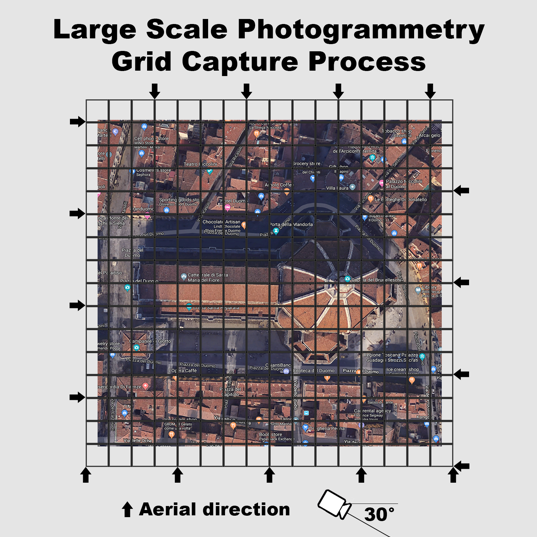

In the grid capture method, as demonstrated in Figure 2, you move across a plane with your camera angled down at approximately 30 degrees. The angle of the drone camera will allow the entire building or area to be captured unless there are large overhangs or awnings. In that case, ground images are needed.

Figure 2: An example of a grid capture process

When capturing images from the ground, it is best to walk around the object and stop every 10 degrees to photograph it with a wide-angle lens (18-24mm). At each stopping point, take a minimum of three images: one facing the structure head-on and one each at 45 degrees to the left and right of that direction.
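As a quick check on how many ground images this produces, the walk-around can be enumerated in a few lines. The sketch assumes stops are described by their compass angle around the object and that the head-on camera direction is simply the opposite bearing; the function name is hypothetical.

```python
def ground_shot_plan(step_deg=10, offsets_deg=(-45, 0, 45)):
    """Return (stop_angle_deg, camera_heading_deg) pairs for a full walk-around."""
    plan = []
    for stop_angle in range(0, 360, step_deg):
        # Facing the object means looking back along the bearing to the stop.
        facing_object = (stop_angle + 180) % 360
        for offset in offsets_deg:
            plan.append((stop_angle, (facing_object + offset) % 360))
    return plan

shots = ground_shot_plan()
print(len(shots))  # 36 stops x 3 images = 108
```

With the defaults taken from the text, the ground pass alone contributes 108 images before any drone photographs are added.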

If the object is tall enough to require a fourth image angled upward, then the radial method will provide a better model than grid so that you can capture the object at multiple altitudes.

Both methods should capture images with at least 50 percent overlap between neighboring photographs so that algorithmic photogrammetry software can accurately build a 3D mesh model.
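The overlap requirement translates into a maximum distance between photographs. The sketch below is a simplified estimate that assumes a camera pointing straight down over flat ground, which is not how an angled grid or radial capture actually flies; the field-of-view value is a made-up example and should be replaced with the figure for the camera in use.

```python
import math

def ground_footprint_m(height_m, fov_deg):
    """Width of ground covered by one image for a camera pointing straight down."""
    return 2 * height_m * math.tan(math.radians(fov_deg) / 2)

def max_spacing_m(height_m, fov_deg, overlap=0.5):
    """Largest distance between consecutive photos that keeps the given overlap."""
    if not 0 <= overlap < 1:
        raise ValueError("overlap must be in the range [0, 1)")
    return ground_footprint_m(height_m, fov_deg) * (1 - overlap)

# Hypothetical camera with a 90-degree field of view flown 50 m above the ground.
print(round(ground_footprint_m(50, 90), 1))  # 100.0
print(round(max_spacing_m(50, 90), 1))       # 50.0
```

Flying lower or asking for more overlap shrinks the spacing, which increases the number of photographs needed to cover the same object.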

While each method can be effective in building a successful 3D model, it is recommended to assess every object individually and plan accordingly. For example, if a structure is complex (perhaps made up of intricate archways, partly composed of reflective surfaces, or containing hollow sections), take extra care to make sure all angles have been captured.

It is also recommended that the camera being used, both on the ground and with a drone, has a sensor with no less than 8 megapixels to provide enough data for the software to create a model.

Ideal weather for large-scale drone photogrammetry is overcast, with the cloud base at least 500 ft above the highest altitude you plan to fly, which keeps the flight in compliance with FAA Part 107 regulations [1].

A consistent cloud coverage reduces color inconsistencies, shadows and reflective glares in your photographs, which will help create a more accurate stitch in post-production.
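The cloud-clearance figure above is easy to turn into a preflight check. The sketch covers only the 500 ft clearance mentioned in the text and none of the other Part 107 conditions; the function names and the example cloud base are assumptions for illustration.

```python
MIN_CLOUD_CLEARANCE_FT = 500  # clearance figure quoted in the text

def highest_allowed_altitude_ft(cloud_base_ft):
    """Highest altitude that still leaves the required gap below the clouds."""
    return cloud_base_ft - MIN_CLOUD_CLEARANCE_FT

def flight_clears_clouds(planned_max_altitude_ft, cloud_base_ft):
    """True if the planned flight stays far enough below the cloud base."""
    return planned_max_altitude_ft <= highest_allowed_altitude_ft(cloud_base_ft)

# Hypothetical day with a reported cloud base of 800 ft.
print(flight_clears_clouds(250, 800))  # True
print(flight_clears_clouds(350, 800))  # False
```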

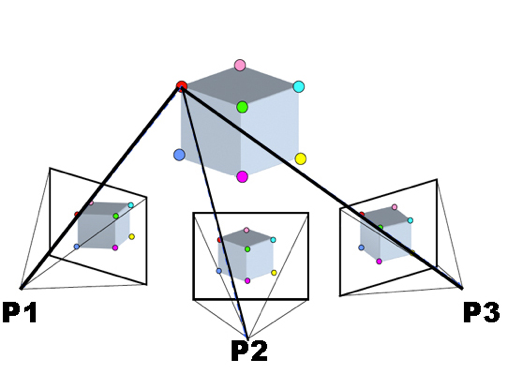

The photogrammetry software, used to create the models, relies on both metadata and contrasts in the photographs (pixels) to create point clouds, shown below in Figure 3. It recognizes angles, focal length and distance with each image to create a 3D mesh model. The software will then overlay texture onto the 3D mesh to create a photorealistic model. Depending on the size and complexity of the object, you should have between 200 and 700 images to input into the program to process.

Figure 3: A common point between multiple images [12]
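The idea of a common point seen in multiple images can be illustrated with a deliberately simplified, two-dimensional example. Real photogrammetry software solves for thousands of points and for the camera positions at the same time, so this is only a toy sketch of the underlying geometry; the function name, camera positions and angles are hypothetical.

```python
import math

def triangulate_2d(cam_a, angle_a_deg, cam_b, angle_b_deg):
    """Locate a feature seen from two known camera positions.

    Each camera contributes a ray: its (x, y) position plus the direction in
    which the feature appears, measured counter-clockwise from the x axis.
    The feature lies where the two rays cross.
    """
    ax, ay = cam_a
    bx, by = cam_b
    dax, day = math.cos(math.radians(angle_a_deg)), math.sin(math.radians(angle_a_deg))
    dbx, dby = math.cos(math.radians(angle_b_deg)), math.sin(math.radians(angle_b_deg))
    denom = dax * dby - day * dbx
    if abs(denom) < 1e-12:
        raise ValueError("rays are parallel, so the point cannot be located")
    # Solve cam_a + t * dir_a == cam_b + s * dir_b for t.
    t = ((bx - ax) * dby - (by - ay) * dbx) / denom
    return (ax + t * dax, ay + t * day)

# Two photos taken 10 m apart that both see the same corner of a building.
x, y = triangulate_2d((0, 0), 45, (10, 0), 135)
print(round(x, 3), round(y, 3))  # 5.0 5.0
```

The parallel-ray error also hints at why overlapping photographs from clearly different positions matter: two views from nearly the same direction give the software very little to work with.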

Post-Production

There are two categories in which you can process your algorithmic photogrammetry model: locally and cloud-based.

Locally, there are programs such as Agisoft Metashape (Linux, Mac OS X, Windows, AMD and Nvidia), RealityCapture from Capturing Reality (Windows and Nvidia only) and 3DF Zephyr (Windows only) [13].

Most of these programs rely on still images with attached metadata (GPS, focal length and altitude) to create the 3D model. Other data, such as laser scans and video, can also be used as input, with varying results and computation time.

Currently, the cloud-based option that has proven most reliable in testing is Autodesk ReCap [14]. This Windows program allows you to upload your images to their servers and has provided an average processing time of approximately two hours for a data set less than 700 images.

More expensive enterprise solutions in the cloud, such as 3DR’s Site Scan Platform [15], can perform more computationally intensive tasks such as measuring volumes of masses and setting GPS ground control points that are used in construction and agriculture.

Once the objects have been created, programs such as Blender (free for Mac OS, Windows and Linux) can be used to edit and correct errors in the 3D models.

In 2018, Adobe announced “Project Aero,” which will integrate USDZ files into their Creative Cloud suite for editing, animation and publication [16]. Adobe is promising a turnkey approach to AR creation, animation and distribution to increase the adoption of the technology.

Currently, post-production requires a content creator with an intermediate understanding of 3D modeling and textures, a level that can be reached through online webinars and software tutorials.

Distribution

All of the algorithmic photogrammetry processing programs allow for exporting 3D models in compatible formats for distribution. The most commonly exported formats are .3DS (3D Studio Max), .OBJ (Wavefront), .BLEND (Blender), .DAE (Collada) and .FBX (Autodesk exchange) [17].
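Part of the reason these formats travel well is that some of them are plain text. As a minimal sketch, the function below writes the geometry of a mesh in Wavefront OBJ form, using `v` lines for vertices and `f` lines for faces. It leaves out textures and materials, which a photorealistic model would also need, and the function name and the single-triangle example are hypothetical.

```python
def mesh_to_obj(vertices, faces):
    """Serialise a mesh as Wavefront OBJ text (geometry only).

    vertices: list of (x, y, z) tuples
    faces: list of tuples of 0-based indices into the vertex list
    """
    lines = ["# geometry-only OBJ export"]
    for x, y, z in vertices:
        lines.append(f"v {x} {y} {z}")
    for face in faces:
        # OBJ numbers vertices starting at 1, so shift each index by one.
        lines.append("f " + " ".join(str(index + 1) for index in face))
    return "\n".join(lines) + "\n"

# A single triangle as a stand-in for a scanned model.
print(mesh_to_obj([(0, 0, 0), (1, 0, 0), (0, 1, 0)], [(0, 1, 2)]))
```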

Web-based publishers like SketchFab allow for teams to preview, edit and embed objects for desktop publication, VR viewing and AR integration.

On June 4, 2018, Apple debuted a new 3D file format, USDZ, based on Pixar’s Universal Scene Description. This allowed for easier distribution across iOS and macOS platforms after Apple updated its proprietary SceneKit and ARKit application programming interfaces (APIs) [18]. The new file format allows for a “quick look,” which enables developers to embed AR objects directly onto mobile-friendly webpages in Apple’s Safari web browser. The user can then interact with the 3D object by turning on their smartphone camera and placing the object into their surroundings, which removes the need to create in-house applications to experience AR.
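On a webpage, the embed is typically a link to the USDZ file that is marked with `rel="ar"` and wraps a preview image. The sketch below generates that markup from Python so it could be dropped into a story template. It reflects the pattern as commonly documented for Safari at the time of writing, and the function name and file paths are placeholders rather than anything from a real newsroom system.

```python
from html import escape

def ar_quick_look_link(usdz_url, thumbnail_url, alt_text):
    """Build an HTML link that Safari on iOS can open as an AR object."""
    return (
        f'<a rel="ar" href="{escape(usdz_url, quote=True)}">'
        f'<img src="{escape(thumbnail_url, quote=True)}" '
        f'alt="{escape(alt_text, quote=True)}">'
        "</a>"
    )

# Placeholder paths for a scanned object and its preview image.
print(ar_quick_look_link("models/fire-truck.usdz", "img/fire-truck.jpg", "Fire truck"))
```

Browsers that do not support the feature treat the markup as an ordinary link to the file, so the same story page can be served to every reader.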

Google is also working on a web-based version of ARCore, with an API that allows developers to build 3D objects into their apps and embed them directly onto mobile websites viewed in its browser, Chrome [19].

Mobile is not the only application for 3D objects created through photogrammetry. In-studio AR for broadcast has also advanced, and services like AVID’s Maestro AR, Vizrt, and Brainstorm3D’s Infinity Studio are just a few of the options offered to the broadcasting industry that can use 3D objects in studio.

Using camera-tracking hardware in connection with AR broadcasting software, producers can now place objects virtually in studios for talent to interact with and use as digital props in their shows.

The goal for news organizations and broadcasters is to use the 3D objects in the format that fits best editorially and on the platform that most effectively helps audiences build an understanding of the subject matter.

Practical Applications

While real-world examples of AR in news and broadcast are limited, the proliferation of the use of drones in the gathering of news is proving useful by easily bringing audiences to places previously deemed too difficult or costly. Creating 3D objects for AR will only continue to decrease in both cost and production time.

The large benefit of algorithmic photogrammetry programs for news organizations is the cross-compatibility of file formats for use in web-based publishing, mobile applications and broadcast.

If a news organization scans an object with a 3D photogrammetry program, it could theoretically use the same 3D object for:

- Embedding on a web news story for desktop viewing/interactivity

- Embedding on a web news story for mobile viewing/interactivity/AR with use of smartphone camera

- Building 3D object directly into news app for AR use in iOS or Android

- Creating a digital set piece for interactivity in a live broadcast

Already, AR objects have been used in web/mobile/broadcast applications and the potential to use photogrammetry for photorealistic 3D models could help audiences further understand map-based news stories while providing context for a location.

For example: A news organization working on a series of stories on wildfires in California could scan burned locations, healthy forests, structures in high-risk areas, firefighting equipment and other elements that make up their reporting. They could then take those object scans to show scale and context for viewers across multiple platforms (web/mobile/broadcast).

However, the true value in drone photogrammetry for news is not going to be in the technology. It will be in the application of the technology within the storytelling process. While audiences may be impressed with something “new,” the pace of technological advancement is so fast in news and broadcast production that the diffusion of innovation has the potential to come quickly.

Over the last 20 years we have seen enhancements in interactive graphics, drone photography and videography, social media interactivity, HD, 4K and 8K. However, there is not much evidence to support the theory of a single technological innovation increasing value if it does not provide audiences with quality content utilizing the technology. The challenge for producers will be to find ways to bring context and create narratives around photorealistic AR for audiences.

The good news is that future platforms will become more accessible while the costs of production and computing time to create models will both decrease. This means that news organizations will be able to efficiently build photogrammetry at scale into their productions and keep libraries of scanned locations using drones for future stories.

References

1. “Fact Sheet – Small Unmanned Aircraft Regulations (Part 107).” FAA Seal, 19 Sept. 2014, faa.gov/news/fact_sheets/news_story.cfm?newsId=20516.

2. Bochenek, Agatha, “Reality Check: Augmented Reality and Virtual Reality Are Changing the Media Landscape.” IAB – Empowering the Marketing and Media Industries to Thrive in the Digital Economy, 11 July 2018, iab.com/news/reality-check-ar-vr/.

3. ASPRS online Archived May 20, 2015, at the Wayback Machine.

4. Pacioli, Luca. “Summa De Arithmetica Geometria Proportioni Proportionalita.: Continentia De Tutta Lopera”. Paganino Paganini, 1494.

5. Birdseye, C.H., “Stereoscopic Phototopographic Mapping”, Annals of the Association of American Geographers, 1940, 30(1): 1-24.

6. Paul R. Wolf, Ph.D.; Bon A. Dewitt, Ph.D.; Benjamin E. Wilkinson, Ph.D., “Elements of Photogrammetry with Applications in GIS, Fourth Edition,” Introduction to Analytical Photogrammetry.

7. Roberts, Graham. “Augmented Reality: How We’ll Bring the News Into Your Home.” The New York Times, Feb. 2, 2018, nytimes.com/interactive/2018/02/01/sports/olympics/nyt-ar-augmented-reality-ul.html.

8. Perez, Sarah. “The NYT Debuts Its First Augmented Reality-Enhanced Story on IOS.” TechCrunch, Feb. 6, 2018, com/2018/02/06/the-nyt-debuts-its-first-augmented-reality-enhanced-story-on-ios/.

9. Welch, Chris. “Beam Me up, Wolf! CNN Debuts Election-Night ‘Hologram’.” Cable News Network, Nov. 6, 2008, 3:22 p.m., cnn.com/2008/TECH/11/06/hologram.yellin/index.html.

10. Nowak, Peter. “CNN’s Holograms Not Really Holograms | CBC News.” CBCnews, CBC/Radio Canada, Nov. 6, 2008, cbc.ca/news/technology/cnn-s-holograms-not-really-holograms-1.756373.

11. Becker, Rachel. “This Terrifying Graphic from The Weather Channel Shows the Power and Danger of Hurricane Florence.” The Verge, Sept. 13, 2018, theverge.com/2018/9/13/17857478/hurricane-florence-storm-surge-flooding-the-weather-channel-video-graphics.

12. Source: https://commons.wikimedia.org/wiki/File:Sfm1.jpg

13. “Comparison of Photogrammetry Software.” Wikipedia, Wikimedia Foundation, 13, 2019, en.wikipedia.org/wiki/Comparison_of_photogrammetry_software.

14. “PHOTOGRAMMETRY SOFTWARE.” CAD Software | 2D And 3D Computer-Aided Design | Autodesk, Redshift EN, autodesk.com/solutions/photogrammetry-software.

15. “Site Scan.” 3DR, com/products/site-scan-platform/.

16. “Project Aero.” Adobe Captivate-Unlock The Future of Smart ELearning Design, adobe.com/products/projectaero.html.

17. “3D File Format.” Smartboard – EduTech Wiki, unige.ch/en/3D_file_format.

18. “Usdz File Format Specification.” Introduction to USD, pixar.com/usd/docs/Usdz-File-Format-Specification.html.

19. “Augmented Reality for the Web | Web | Google Developers.” Google, google.com/web/updates/2018/06/ar-for-the-web.